For autonomous vehicles (AVs) to travel safely, they require up-to-date, high-definition maps that accurately reflect their changing surroundings. If not continually revised, these maps quickly become outdated, increasing the risk of accidents and stalling the development of fully self-driving vehicles.

Dr. Gaurav Pandey, associate professor in the Department of Engineering Technology and Industrial Distribution (ETID), received a grant from Ford Motor Company to push the boundaries of current technology.

At his Computer Vision and Robotics Lab in ETID’s Multidisciplinary Engineering Technology program, Pandey is focused on creating the best possible maps in the most efficient way, with the goal of making highly and fully automated transportation part of daily life.

Some people believe AVs can safely navigate from point A to point B relying solely on cameras, just as humans use their eyes. However, Pandey points out that humans use their vision in conjunction with mental maps, which they update automatically when conditions change, such as during construction. Today’s mapping technology for AVs is costly and offers no easy way to update maps in real time.

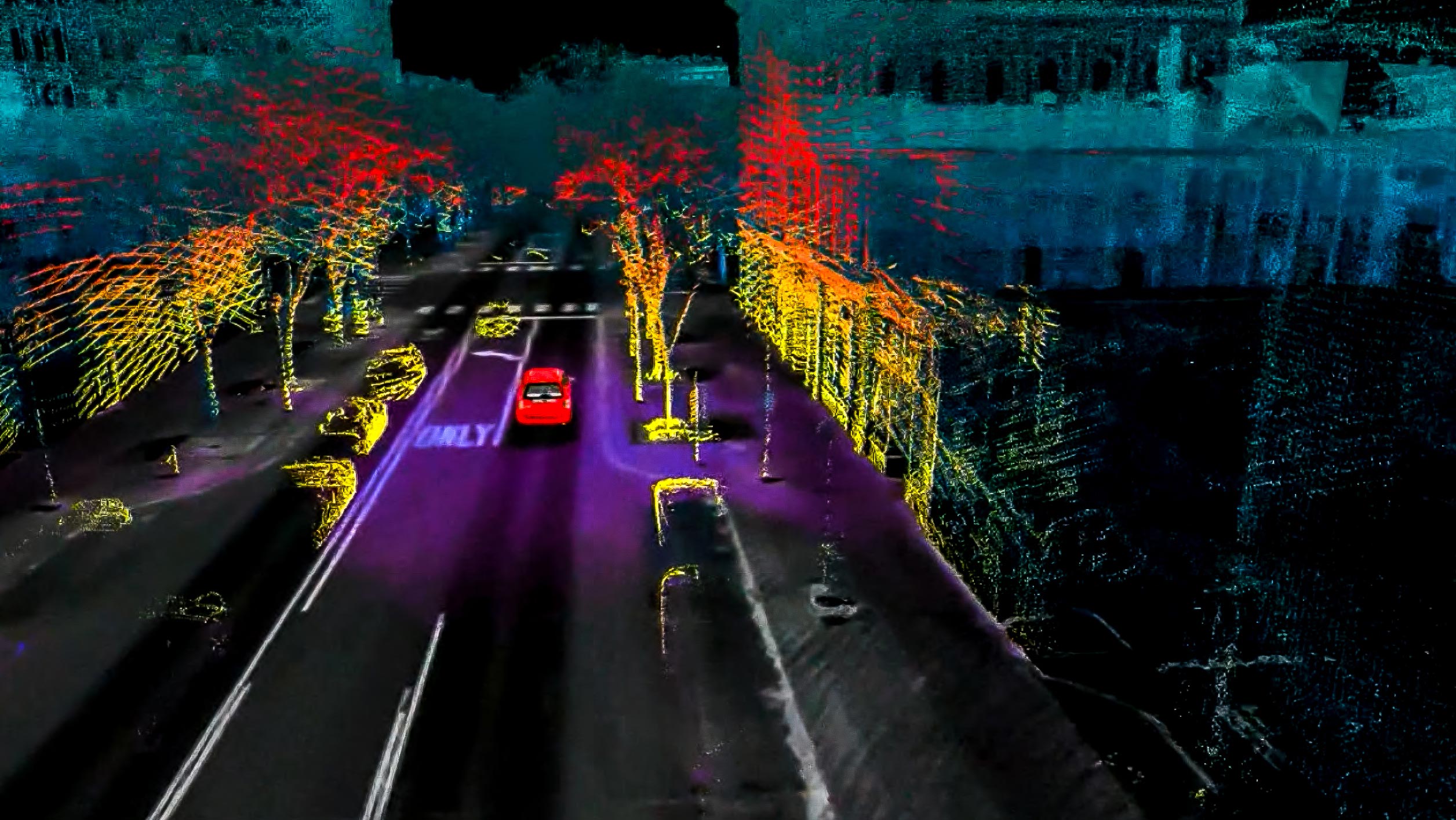

To advance this technology, Pandey is developing a software framework for crowd-sourced 3D map generation and visual localization from camera data. The crowd-sourced data will come from the cameras and sensors of Ford vehicles already on the road, leveraging information that is already being collected automatically rather than relying on specialized mapping cars that drive around to gather data.

Pandey will use the crowd-sourced data to create new mapping capabilities that allow real-time updates and aid low-cost visual localization. The intent of this technology is to improve autonomous driving and, ultimately, put fully self-driving vehicles on the road.

The Society of Automotive Engineers defines six levels of autonomy, from none at level zero to full at level five. Many of today’s new vehicles are level two, offering driver assistance such as self-parking and speed control. Level three vehicles, currently sold by only one company, allow the driver to remove their hands from the steering wheel while remaining ready to take over if needed. Level four needs no driver interaction except in some weather conditions. The eventual goal is level five, at which no human intervention is necessary.

Predictions vary regarding when level four and five AVs will be available for consumer purchase. While several companies are testing level four AVs on public roads in the U.S., only a few have them in any form of service. Currently, their use is restricted to driverless taxis operating in limited geographic areas within large cities. Waymo, owned by Google’s parent company Alphabet, operates AV taxis in the San Francisco Bay Area, Los Angeles County and Metro Phoenix. Amazon’s Zoox carried its first passengers in California last year and announced it will begin testing its robotaxis in Austin and Miami. Other companies are in the testing phase of their driverless taxis.

Level four and five vehicles are expected to increase safety for riders, surrounding vehicles, pedestrians and cyclists by eliminating human error. They will give their riders free time to work or relax. As a licensed driver will not be required, they may be of great benefit to those with disabilities that make driving difficult or impossible.

Pandey brings over 10 years of experience in AV research to the goal of making self-driving vehicles a common reality in our world. He says we are already witnessing the use of AVs in various controlled environments, such as in mining and ports, in addition to the AV taxis. For him, it is no longer a question of "if" we will see full automation integrated into all aspects of our lives, but rather "when" it will be implemented at scale.