Two primary areas within machine learning are supervised learning and reinforcement learning. Most of the widely known machine learning successes are in supervised learning, with applications such as image recognition on your smartphone or Facebook and the ability to interact with Siri or Google Home.

Reinforcement learning is a class of machine learning that addresses the problem of learning to control systems in an unknown and evolving environment. Reinforcement learning has had some shining successes in the past few years, such as DeepMind's celebrated AlphaGo algorithm and its successor AlphaZero, which outperformed human players in complicated games such as Go, chess and shogi. Reinforcement learning is considered an enabling technology for the transition to a future with autonomous robots and self-driving cars. However, unlike the supervised learning applications that are already a part of everyday life, successful reinforcement learning tools, though they exist, are less common.

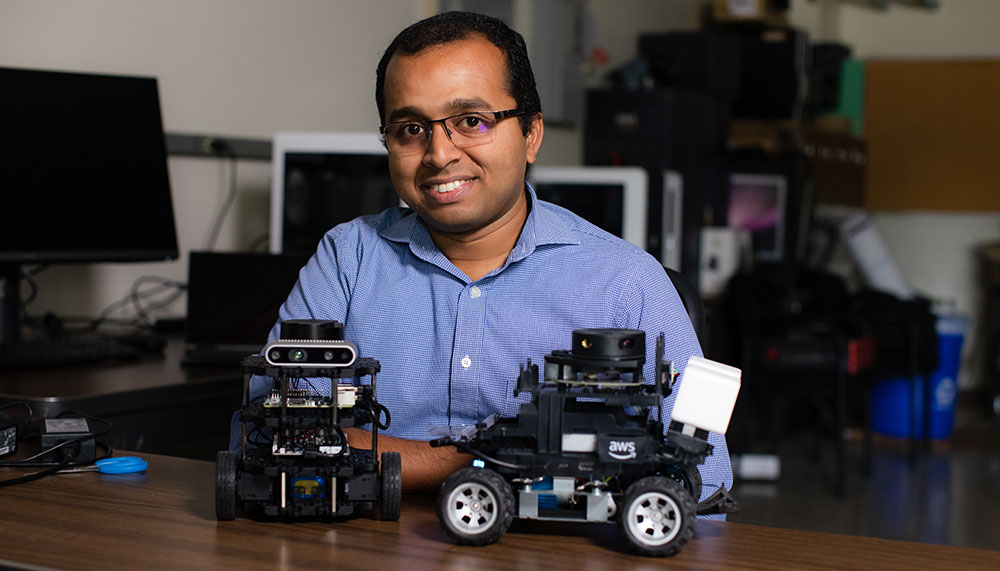

Dr. Dileep Kalathil, assistant professor in the Department of Electrical and Computer Engineering at Texas A&M University, is investigating how reinforcement learning can be successfully integrated into more aspects of daily life by examining three primary elements – the robustness, safety and adaptivity of the algorithms.

The successes of reinforcement learning have so far been limited to very structured or simulated settings, such as simple games or simple robotic tasks. Current algorithms struggle to achieve the desired outcomes when applied to real-world settings. This is known as the simulation-to-reality gap, and it is one of the most significant challenges preventing researchers from deploying robots or systems driven by reinforcement learning algorithms in everyday life.

“It’s very important that algorithms, in general, should have a robustness and safety guarantee,” Kalathil said. “And it’s even more important in reinforcement learning because these algorithms deal with real-world engineering systems, such as cars, power systems and communication networks.”

Kalathil is studying multiple aspects of reinforcement learning to build a complete picture of the gaps in this area and of the room to address them innovatively. To that end, he is focusing on robust reinforcement learning, safe reinforcement learning and meta-reinforcement learning.

The goal of robust reinforcement learning is to learn a policy that is robust against uncertainty, meaning that the algorithm can cope with unforeseen events, whether they occur in a model or in the real-world environment. Safe reinforcement learning focuses on developing control policies that satisfy the necessary safety constraints of real-world systems, such as keeping voltage variations in power systems within acceptable limits.
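To make the robust objective concrete, here is a minimal sketch of one textbook formulation, robust value iteration, in which the agent plans against the worst case among several candidate models of the environment. It is an illustration only and is not taken from Kalathil's papers. The two-state problem, the reward numbers, the function name `robust_value_iteration` and the assumption that the worst case is chosen separately for each state-action pair are all hypothetical.

```python
import numpy as np

def robust_value_iteration(models, rewards, gamma=0.9, iters=200):
    """Plan against the worst case over a finite set of transition models.

    models:  array of shape (K, S, A, S), K candidate transition models
    rewards: array of shape (S, A)
    Assumption: the worst-case model is picked independently per (state, action).
    """
    values = np.zeros(rewards.shape[0])
    for _ in range(iters):
        # Expected next-state value under each candidate model: shape (K, S, A)
        next_values = models @ values
        # Pessimistic backup: take the least favorable model for each (state, action)
        q = rewards + gamma * next_values.min(axis=0)
        values = q.max(axis=1)
    return values, q.argmax(axis=1)

# Hypothetical toy problem: state 0 is "operating", state 1 is "failed" (absorbing).
# Action 0 is cautious (reward 0.5), action 1 is aggressive (reward 1.0).
nominal = np.zeros((2, 2, 2))
nominal[0, 0, 0] = 1.0   # cautious action keeps the system operating
nominal[0, 1, 0] = 1.0   # nominal model: aggressive action is also fine
nominal[1, :, 1] = 1.0   # failed state is absorbing

perturbed = nominal.copy()
perturbed[0, 1] = [0.0, 1.0]  # perturbed model: aggressive action causes failure

models = np.stack([nominal, perturbed])
rewards = np.array([[0.5, 1.0],
                    [0.0, 0.0]])

nominal_values, nominal_policy = robust_value_iteration(models[:1], rewards)
robust_values, robust_policy = robust_value_iteration(models, rewards)
print("nominal policy:", nominal_policy, "robust policy:", robust_policy)
```

In this toy setting, planning with the nominal model alone favors the aggressive action in the operating state, while the robust planner settles on the cautious one because it accounts for the model in which the aggressive action leads to failure.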

Meta-reinforcement learning is an approach in which the experience gained from solving a single task or a variety of tasks is distilled into a meta-policy that allows the system to seamlessly perform a new, related task. Kalathil’s research focuses on developing novel reinforcement learning algorithms that use these frameworks to overcome the challenges posed by the simulation-to-reality gap and thus enable successful reinforcement learning-based technologies for the autonomous future.

Kalathil, in collaboration with his students and colleagues, published four papers on these topics in the proceedings of the Conference on Neural Information Processing Systems, one of the most prestigious conferences in the area of machine learning, in December 2022. He previously received the Faculty Early Career Development (CAREER) Award from the National Science Foundation for work in this area.