Emergency response workers face dangerous situations every day, but technology can make their work environments safer.

Researchers at Texas A&M University, the Texas A&M Engineering Experiment Station, Virginia Tech and the University of Florida are working to accelerate the use of new technologies in emergency response by developing an adaptive personalized mixed-reality learning platform that empowers first responders to perform their jobs more safely and effectively.

Dr. Ranjana Mehta, co-lead and associate professor in industrial engineering, and her collaborators have been awarded a $1 million National Science Foundation Convergence Accelerator grant to create technology-based solutions supporting emergency response worker safety and performance on the job. The award falls under one of the NSF’s 10 Big Ideas, the Future of Work at the Human-Technology Frontier.

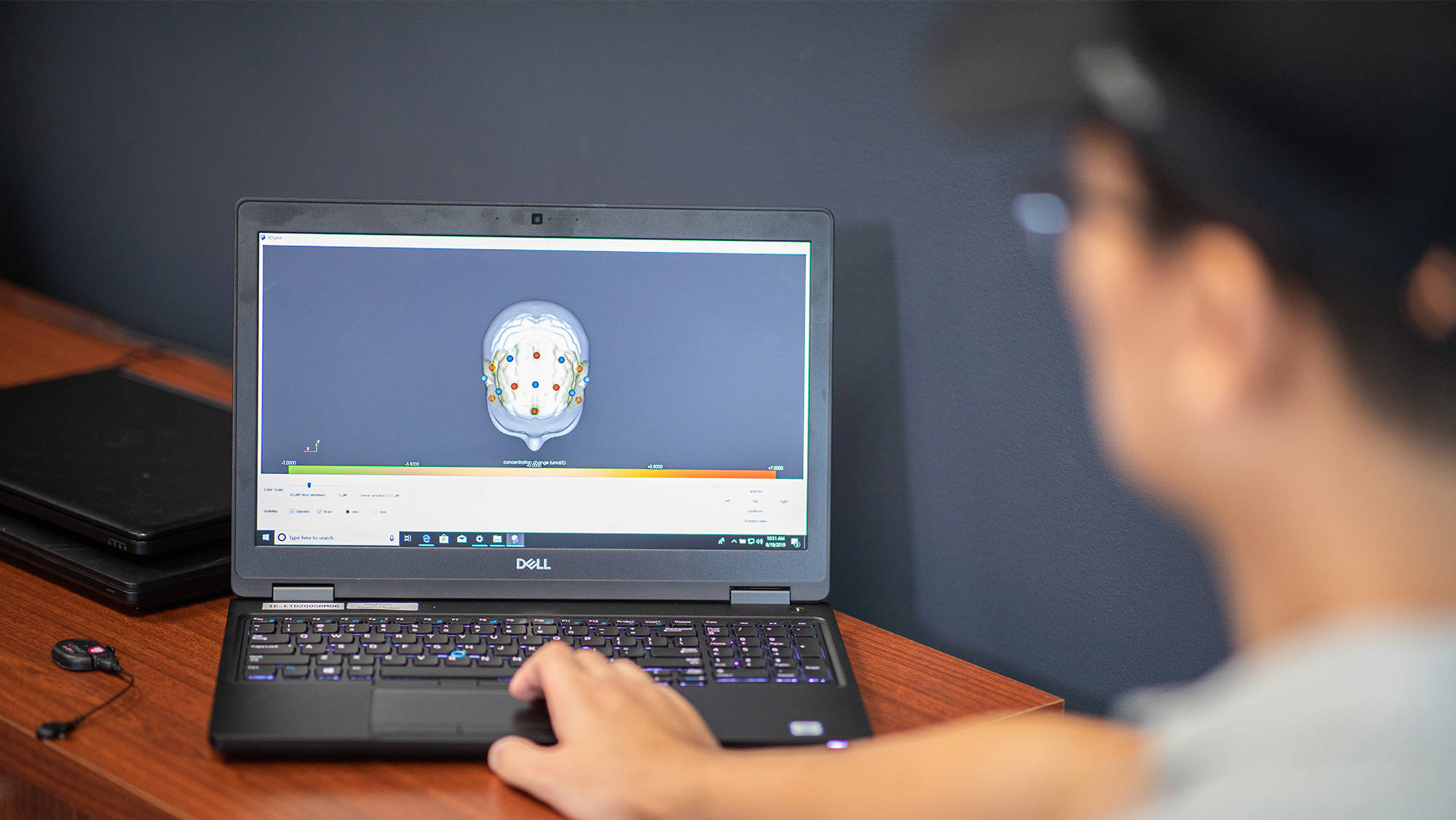

Their project, “Learning Environments with Advanced Robotics for Next-Generation Emergency Responders (LEARNER),” focuses on integrating innovative robotics and augmented/virtual reality (AR/VR) technologies into emergency response work through use-inspired technology development, a mixed-reality learning platform and the creation of an open-source knowledge-sharing platform, which will help share the information learned over the course of the project.

The research aims to create technology, in close partnership with industry and public safety stakeholders, that is centered on the emergency response worker, specific to the situation and adapted for a particular use. The research will use three technologies to accomplish its goals: ground robots, exoskeletons (or wearable robots) and AR.

“Ground robots could aid (emergency response) workers during surveillance and search and rescue operations by going into areas humans cannot safely access,” said Mehta. “Powered exoskeletons allow workers to enhance their physical capacity, while still maintaining autonomy from the machine. AR allows (emergency response) workers to increase their teamwork skills, including wayfinding, collaboration and decision making during a stressful situation, successfully operate robotic machinery in the field, and learn new skills safely.”

She said emergency response workers need near-constant training to reinforce current strategies and tactics and to develop the knowledge, skills and abilities they need to handle the next emergency. The technology generated through this research will help address workers’ need for constant learning and reduce the barriers to technology acceptance and adoption.

The project is currently in phase one, which lasts nine months, and the team will have the opportunity to apply for phase two funding next year. Phase two would provide additional funding for two years.

During phase one, Mehta will collaborate with Texas A&M researchers Dr. Jason Moats and Dr. Robin Murphy, with researchers in the Cognitive Engineering for Novel Technologies Lab, Occupational Ergonomics and Biomechanics Lab and Terrestrial Robotics Engineering and Controls Lab at Virginia Tech, and with Dr. Jing Du of the University of Florida. Industrial partners include Imaginate, Ekso Bionics and the Florida Institute for Human and Machine Cognition.