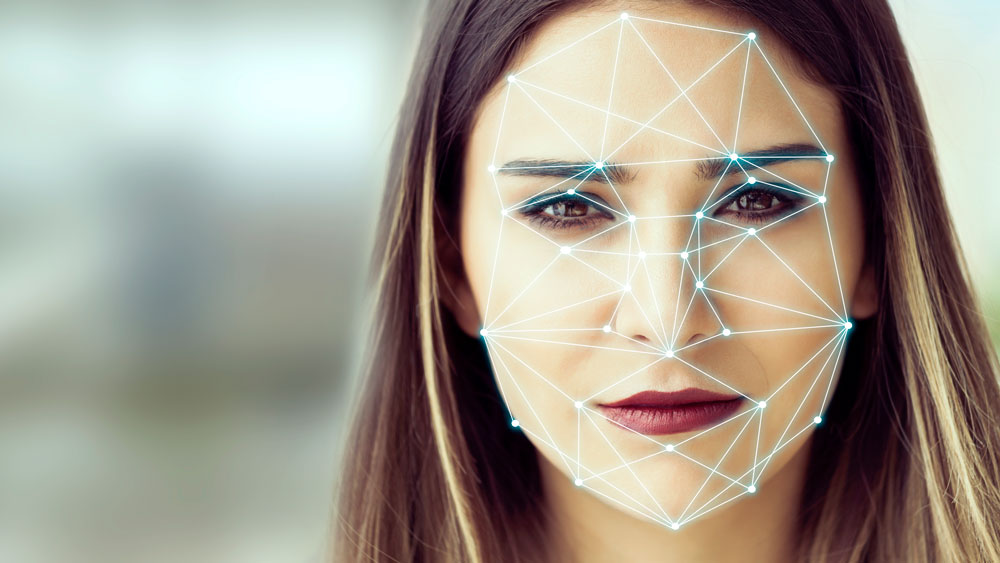

From Snapchat photo filters to crowd surveillance at the Super Bowl to identity verification at airports, facial analysis techniques have taken the tech industry by storm. This branch of artificial intelligence is constantly evolving, with applications that include image tagging on social media, expression recognition, security, marketing, robotics and more.

Bridging industry and education, a team of researchers led by Dr. Zhangyang “Atlas” Wang, assistant professor in the Department of Computer Science and Engineering at Texas A&M University, is collaborating with MoodMe to improve the algorithms used in the company’s facial analysis and recognition programs.

The team, which includes graduate students Ziyu Jiang and Jiayi Shen as well as undergraduates Daniel Ajisafe and Geeth Tunuguntla, will focus specifically on techniques for re-identifying participants in video conferences so that their emotions and attentiveness can be measured.

Wang’s group conducts state-of-the-art research in machine learning and computer vision. Its work has drawn international attention, as reflected in the group’s many top-tier publications, international competition awards and partnerships with industry leaders.

“This collaboration provides us with a unique opportunity to tackle a real-world challenge, where a solution is highly demanded, based on our existing strength in computer vision and machine learning,” said Wang.

As Wang explained, the Texas A&M team will develop algorithms that can recognize video conference participants across multiple sessions and dates despite changes in attire, hairstyle, lighting, seating arrangement and other conditions. By incorporating deep learning into their design, the researchers aim not only to improve on state-of-the-art algorithms for human facial analysis but also to advance the ability of artificial intelligence to interpret the meaning behind facial expressions.

Computers cannot see faces the way people do, but they can break an image down into pixels and compare different regions of it to locate key facial features, such as the eyes and mouth. These features are then compared against a large database of known example images to determine what the expression might mean.

This lets companies measure, in real time during video conferences, how attentive participants are and how they respond emotionally to a message, presentation or product, giving them valuable insight into their clients, staff and prospective customers.

“We are excited by the chance to work with a leading company like MoodMe and to make broader impacts on the field of computer vision and deep learning,” said Wang.

An international leader in facial recognition and insight, MoodMe applies deep learning to create embedded software components that provide facial insights and augmented reality face filters. MoodMe helps companies engage consumers and analyze their experiences while respecting their privacy.

MoodMe has previously worked with AT&T, FIFA, Stanford University, Final Four, Nina Ricci, Body World and Gucci. Applications made with MoodMe face software allow users to virtually try on different sunglasses or cosmetics as well as measure the emotional response of an audience. The company is advancing the research and development of real-world artificial intelligence, neural networks and facial recognition.

“Privacy is at the center of all our work, both in research and in product engineering,” said Chandra de Keyser, CEO of MoodMe. “All the insights we gather from faces fully respect people’s privacy – no faces are stored ever, nor sent to the Cloud. With all the promises of internet giants, there is still no ‘one-click’ to delete all our pictures or data that they have accumulated about us. The focus of our research and engineering is to reach the highest performances and precision on edge computing platforms like smartphones, embedded/internet of things, robots and desktop computers.”

De Keyser added that he was excited to work with Texas A&M and Wang’s team because of their top-notch academic and research talent, their friendly and open-minded attitudes, and the dynamic, “startup-like” execution of the project.

“We hope to learn a lot and bring our products to the next level [through this project],” said de Keyser. “While our biggest market is the United States, our strategy is to innovate on both sides of the Atlantic. We were seeking a research and development partner in the U.S. to accelerate our innovation and go-to-market cycles with a focus on algorithms, which provide face insights such as emotion detection and identification. Our meeting with Atlas was excellent, a perfect match of interests and, just as important, a fast response time.”