A major challenge for autonomous robots is navigating large environments where no global positioning system (GPS) signal is available. Saurav Agarwal, a doctoral student, and Dr. Suman Chakravorty, an associate professor, both from the Department of Aerospace Engineering and the Estimation Decision and Planning Lab at Texas A&M University, have developed an indoor mapping technology that allows autonomous robots to be deployed affordably in extremely large environments (e.g., Amazon warehouses covering millions of square feet).

Agarwal and Chakravorty identified a major gap in automation for warehousing and logistics and received a $50,000 Innovation Corps (I-Corps) grant from the National Science Foundation to research commercial needs for their technology. The goal of their research was to develop tools and methods that enable robust long-term autonomy for mobile robots.

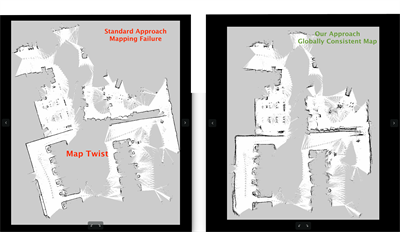

This I-Corps project grew out of research into the problem of Simultaneous Localization and Mapping (SLAM). In SLAM, a robot has no prior knowledge of its environment; it must use its sensor data and its own actions to build a map of the environment while simultaneously estimating its position within that uncertain map. Competing methods in this area exhibit positioning errors that may make them unsuitable for long-term navigation.
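To make the "simultaneous" part of SLAM concrete, here is a minimal toy sketch. It is a generic textbook Kalman-filter formulation, not the researchers' method (the article does not describe their algorithm), and every name, noise value and setup detail below is a hypothetical assumption: a robot moves along a line, a single landmark sits at an unknown position, and the filter jointly estimates the robot position `x` and the landmark position `m` from noisy odometry and noisy range measurements `z = m - x`.

```python
import random


def predict(state, P, u, q):
    """Motion step (toy model): robot moves by commanded u; landmark is static."""
    x, m = state
    # Only the robot's position variance grows, by the motion-noise variance q.
    P = [[P[0][0] + q, P[0][1]],
         [P[1][0], P[1][1]]]
    return [x + u, m], P


def update(state, P, z, r):
    """Measurement step: z is an observed offset m - x with noise variance r."""
    x, m = state
    H = [-1.0, 1.0]  # Jacobian of the measurement m - x w.r.t. (x, m)
    PHt = [P[0][0] * H[0] + P[0][1] * H[1],
           P[1][0] * H[0] + P[1][1] * H[1]]
    S = H[0] * PHt[0] + H[1] * PHt[1] + r  # innovation variance
    K = [PHt[0] / S, PHt[1] / S]           # Kalman gain
    innovation = z - (m - x)
    new_state = [x + K[0] * innovation, m + K[1] * innovation]
    # Covariance update: P <- (I - K H) P
    HP = [H[0] * P[0][0] + H[1] * P[1][0],
          H[0] * P[0][1] + H[1] * P[1][1]]
    new_P = [[P[i][j] - K[i] * HP[j] for j in range(2)] for i in range(2)]
    return new_state, new_P


def run(steps=50, seed=0):
    """Simulate a hypothetical robot driving toward a landmark at 10.0."""
    rng = random.Random(seed)
    true_x, true_m = 0.0, 10.0
    q, r = 0.05, 0.01                 # assumed motion / sensor noise variances
    state = [0.0, 0.0]                # robot starts at origin; landmark unknown
    P = [[0.0, 0.0], [0.0, 1e6]]      # start pose known, landmark nearly unknown
    for _ in range(steps):
        u = 0.1
        true_x += u + rng.gauss(0.0, q ** 0.5)
        state, P = predict(state, P, u, q)
        z = (true_m - true_x) + rng.gauss(0.0, r ** 0.5)
        state, P = update(state, P, z, r)
    return state, P, true_x, true_m


if __name__ == "__main__":
    state, P, true_x, true_m = run()
    print("estimated robot x:", state[0], "true:", true_x)
    print("estimated landmark m:", state[1], "true:", true_m)
    print("robot position variance:", P[0][0])
```

Because the robot keeps re-observing a landmark it has already mapped, its position variance stays bounded, whereas pure dead reckoning in this toy setup would accumulate a variance of 50 × 0.05 = 2.5 after 50 steps. Real SLAM systems handle many landmarks, nonlinear motion and data association, which this sketch deliberately omits.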

Agarwal and Chakravorty’s mapping technology is three times more robust than existing state-of-the-art technology in both real-world and simulated tests. They have shown that their algorithms allow robots to map environments in cases where openly available mapping technology fails.

Material-handling robots that move goods (boxes, pallets, etc.) through large warehouses and distribution centers are one example of robots that navigate indoors. The researchers’ mapping technology enables such robots to navigate safely and reliably, reducing labor costs for warehouse operators and reducing worker injuries caused by human-driven forklifts. This will, in turn, enable cheaper and faster access to goods for consumers.

The broader impact and commercial potential of this I-Corps project lie in developing autonomous navigation technology that enables systems to operate robustly in uncertain environments without GPS. Commercializing this technology has the potential to revolutionize space exploration, self-driving cars, unmanned aerial vehicles (UAVs) and other systems that need accurate position estimation.

A key advantage of the project's technology is enhanced cybersecurity, since it does not rely on external signals for navigation. The project will also contribute open-source software to the scientific community: the researchers envision a software toolbox that integrates with the widely used Robot Operating System (ROS) and allows researchers to simulate autonomous navigation without GPS.

In less than a year, Agarwal and Chakravorty have taken the idea from a mathematical concept to a proof-of-concept robot running in a live warehouse. They have also shown through virtual reality simulations that their mapping and navigation technology is three times more robust than openly available technology, with a 99.99% success rate in testing. According to the researchers, their technology has never failed in practice, whereas competing technologies fail 50-60% of the time. “We believe our navigation technology can revolutionize the field of autonomous robotics and speed up the time-to-market for safe and reliable commercial robots,” Agarwal says.

The main research challenge for Agarwal and Chakravorty is deploying this technology at scale in real-world warehouses, factories and distribution centers. They are exploring opportunities with the Autonomous Systems Lab under Dr. Srikanth Saripalli, associate professor in the Department of Mechanical Engineering at Texas A&M, to deploy this technology on a real-world forklift.

Two National Science Foundation projects were instrumental in developing this technology. Those projects studied robust estimation and motion planning under uncertainty, paving the way for the robust autonomy solution the two researchers developed.